The Stealer Log Ecosystem: Processing Millions of Credentials a Day

Introduction

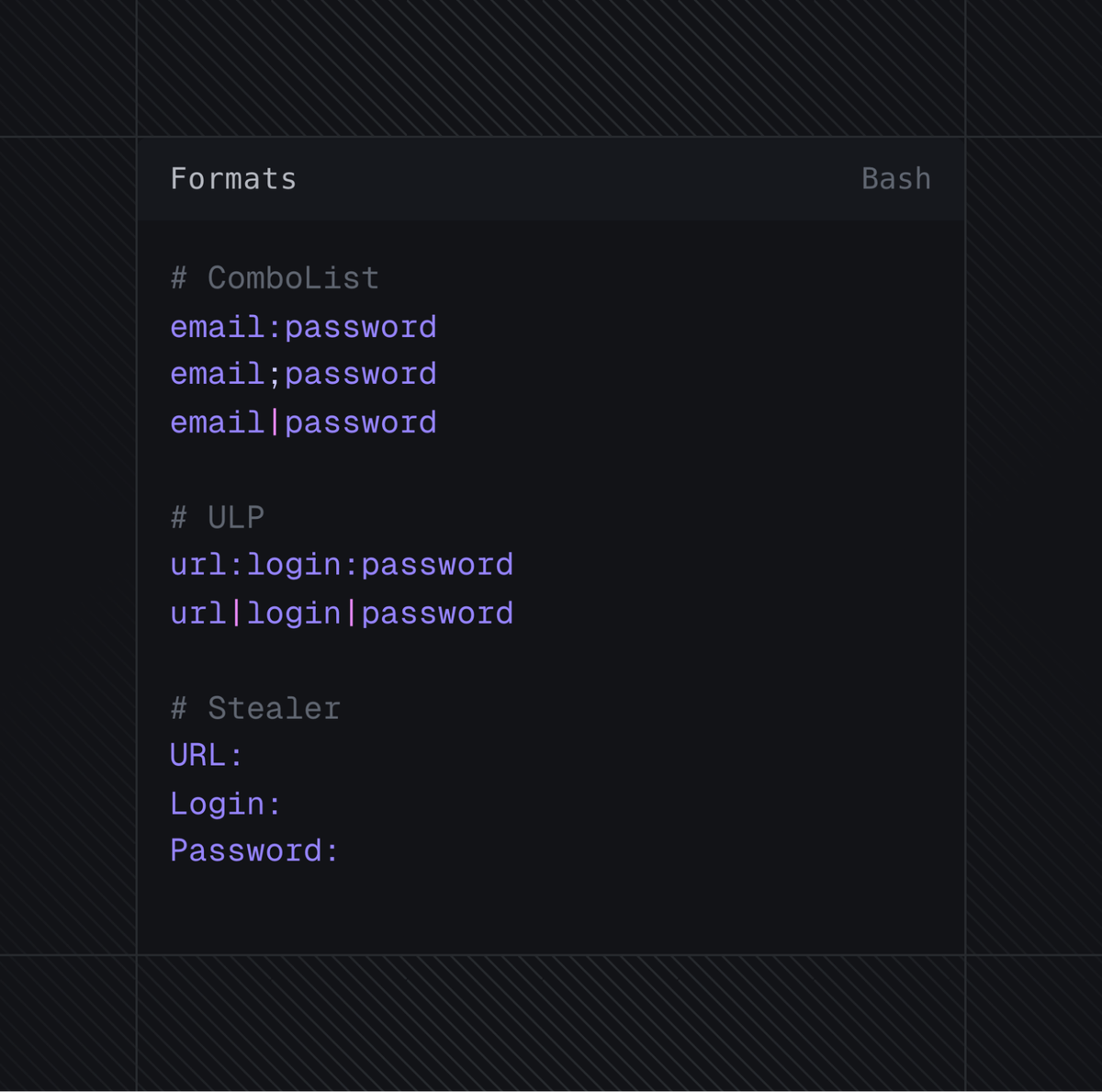

Over the last several years, the stealer ecosystem has evolved in several ways, from the malware families to the platforms used. Close to a year ago, we began monitoring several of these platforms and building a system to ingest the data shared on them. We have shared this data with Have I Been Pwned, with the aim of helping the victims of infostealers.

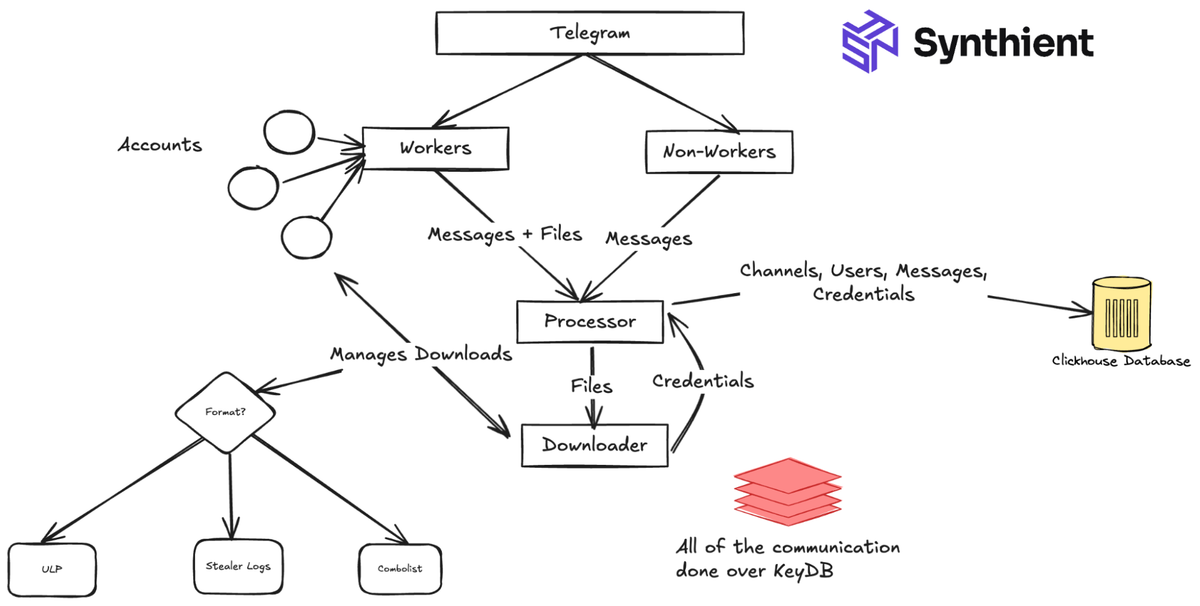

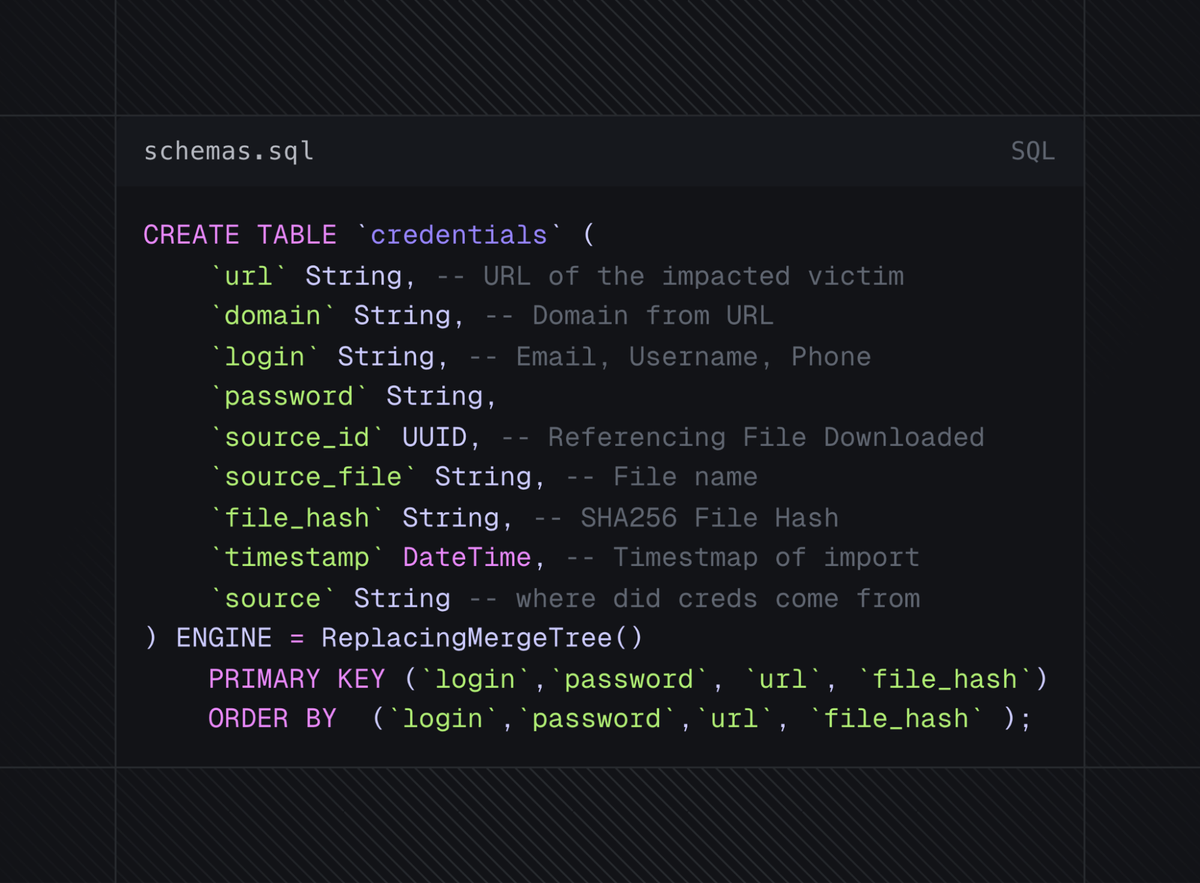

Designing Our System

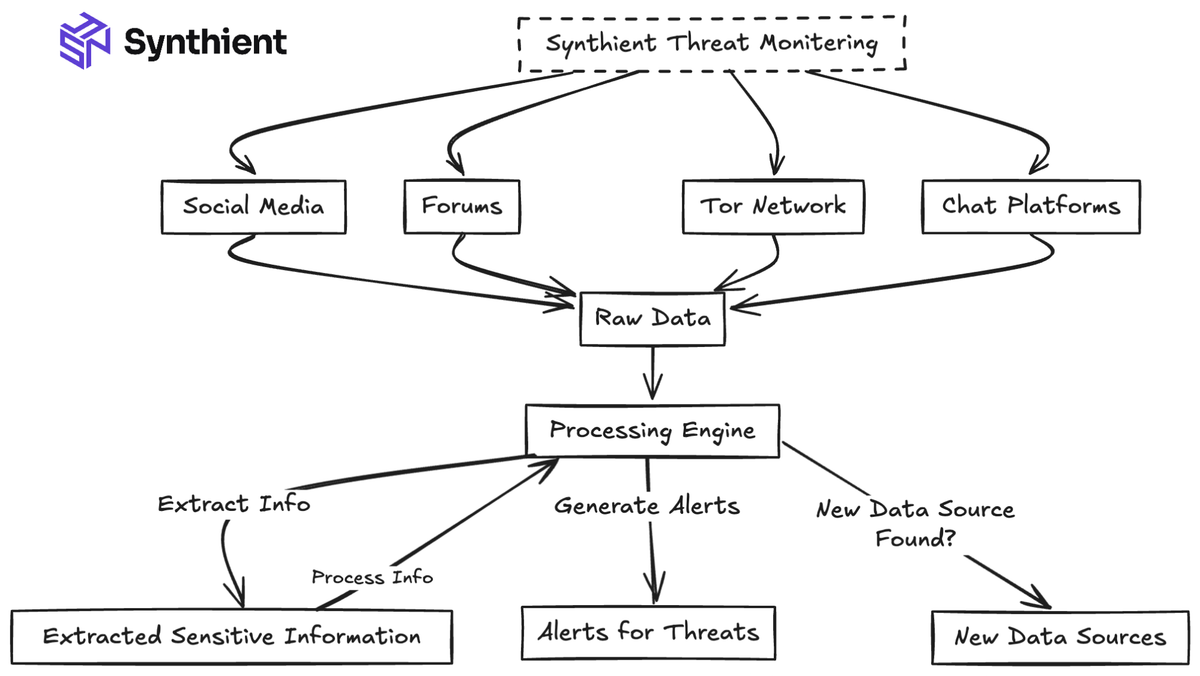

When we designed our system, we wanted to build a competitor to the largest threat intelligence platforms. We aimed to capture as much unique data as possible and to alert in as close to real time as possible. We wanted to monitor the following sources, each of which provided value for a different reason.

- Telegram: One of the primary data drivers within the stealer log ecosystem. At its peak, it contributed millions of unique credentials in a single day.

- Forums: These often contained file-sharing links to combolists, stealer logs, database dumps, and similar data.

- Social Media Sites: Surfaced data sources we might otherwise have missed, often Telegram links to other groups or channels that shared combolists or stealer logs.

- Tor Network: We concluded that it was not worth scraping due to a lack of data.

Fig 1: An early prototype of the initial project

We discovered that Telegram was the largest data driver: a single Telegram account could ingest as many as 50 million credentials in a single day. For this reason, this blog post focuses on Telegram rather than the other platforms.

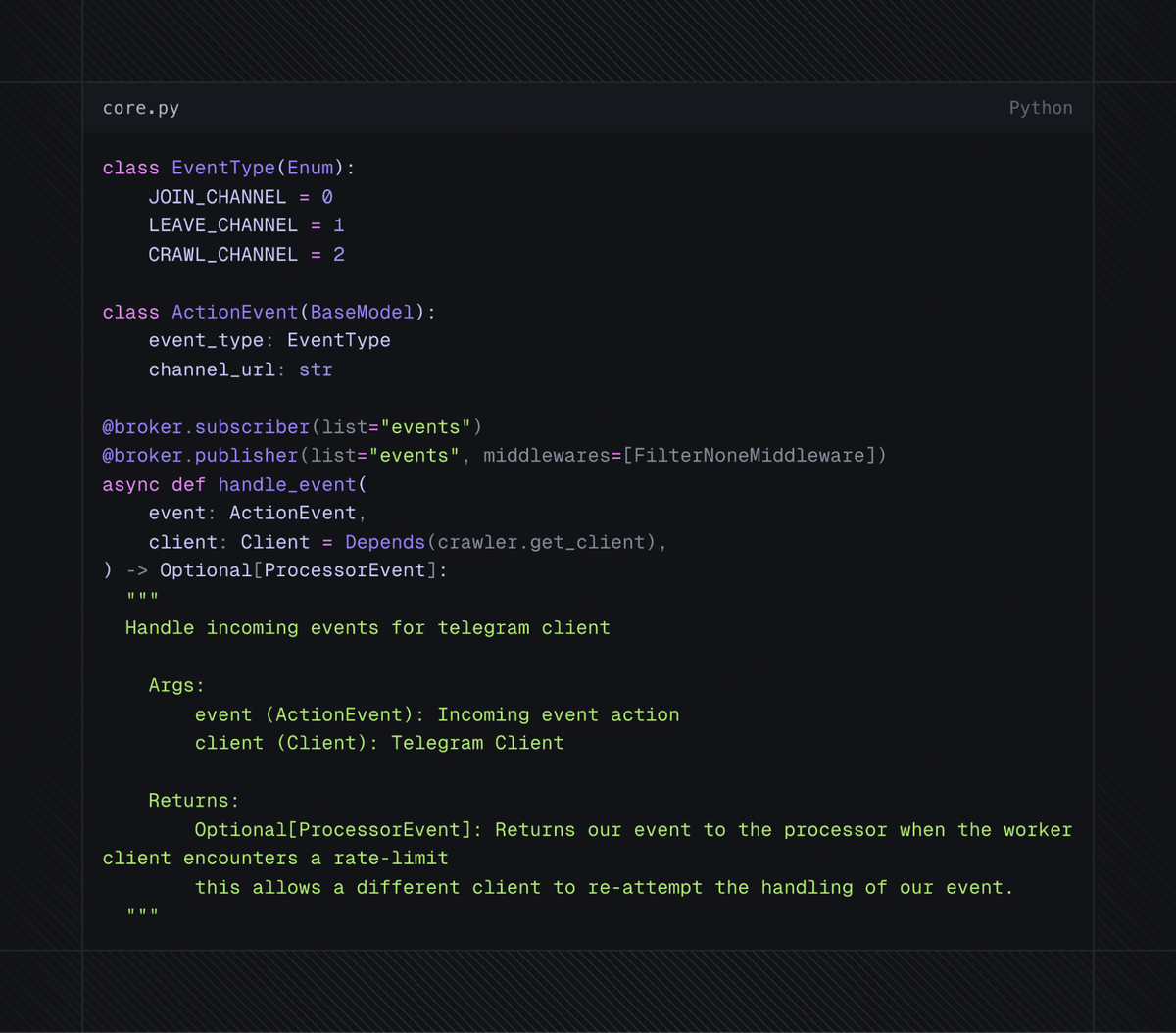

Crawling Telegram

Before we discuss the technical details of building our system, we must provide some background on the stealer scene itself, which is critical to understanding the design decisions made.

Understanding the Stealer Log Ecosystem

The stealer log ecosystem is split into several groups, the main ones being: